I came across this video about using RFID bracelets to track visitors at a Coca-Cola event, with Facebook integration.

Face Tracker in OSX

This video is a test run of Jason Saragih's Face Tracker code, which I compiled and ran on OS X 10.7 with OpenCV 2.3.

Since Xcode builds the product into a folder under the user's Library folder, I have to copy the face model files into the product folder. In OS X 10.7 the Library folder is hidden, so I unhide it with:

```shell
chflags nohidden ~/Library/
```

OpenNI in Processing: simple-openni

I just found this very useful Processing wrapper for OpenNI. It exposes much of OpenNI's functionality to Processing sketches.

OpenNI in Processing – User Tracker

This is the second example from the OpenNI Java binding: the User Tracker.

It crashes when I run it in the 64-bit OS X environment, so for the demo I ran it on a 32-bit Windows 7 machine.
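Since the crash seems tied to 64-bit mode, it can help to confirm which data model the JVM is actually running in before the native OpenNI libraries are loaded. The sketch below is a minimal check, not part of OpenNI or Processing: the class name `JvmBitness` is hypothetical, and `sun.arch.data.model` is a HotSpot/OpenJDK-specific property, so the code falls back to an `os.arch` heuristic when it is missing or unparseable.

```java
/** Hypothetical helper: reports whether the running JVM is 32- or 64-bit. */
class JvmBitness {
    /** Returns 32 or 64, or -1 if the data model cannot be determined. */
    static int dataModel() {
        // HotSpot/OpenJDK expose "32" or "64" here; other JVMs may not.
        String model = System.getProperty("sun.arch.data.model");
        if (model != null) {
            try {
                return Integer.parseInt(model.trim());
            } catch (NumberFormatException e) {
                // e.g. "unknown": fall through to the os.arch heuristic
            }
        }
        String arch = System.getProperty("os.arch", "");
        if (arch.contains("64")) return 64;
        if (arch.equals("x86") || arch.equals("i386") || arch.equals("i686")) return 32;
        return -1;
    }

    public static void main(String[] args) {
        int bits = dataModel();
        System.out.println("JVM data model: " + bits + "-bit (os.arch="
                + System.getProperty("os.arch") + ")");
    }
}
```

Printing this at the top of a sketch makes it obvious whether a run that crashes was in 64-bit mode.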

OpenNI and Processing

Based on the latest Java binding of OpenNI, I ported the SimpleViewer sample into a Processing sketch. The trick is to convert the sample's BufferedImage into a PImage object for display.
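A minimal sketch of that conversion, assuming the frame is available as a `java.awt.image.BufferedImage`. The class and method names are hypothetical, and the block deliberately avoids Processing classes so it compiles on its own. It produces a packed ARGB `int` array laid out row by row, which is the same layout Processing's `PImage.pixels` uses.

```java
import java.awt.image.BufferedImage;

/** Hypothetical helper: copies a BufferedImage into a PImage-style pixel array. */
class BufferedImageToPixels {
    /** Returns width*height packed ARGB ints, row-major (index = y * width + x). */
    static int[] toArgbPixels(BufferedImage src) {
        int w = src.getWidth();
        int h = src.getHeight();
        int[] pixels = new int[w * h];
        // getRGB converts every pixel to the default sRGB ARGB model,
        // whatever the image's internal type (RGB, BGR, gray, ...).
        src.getRGB(0, 0, w, h, pixels, 0, w);
        return pixels;
    }

    public static void main(String[] args) {
        BufferedImage img = new BufferedImage(2, 2, BufferedImage.TYPE_INT_RGB);
        img.setRGB(1, 0, 0xFF0000); // red pixel at x=1, y=0
        int[] px = toArgbPixels(img);
        System.out.printf("%08X%n", px[1]); // prints FFFF0000 (opaque red)
    }
}
```

In the Processing sketch, the returned array can then be copied into a `PImage` created with `createImage(w, h, RGB)`: call `loadPixels()`, copy the array into its `pixels` field (for example with `System.arraycopy`), call `updatePixels()`, and draw it with `image()`.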

I also tested another sample, UserTracker. It seems to run only in a 32-bit environment, not a 64-bit one.

KinectFusion

This is one of the hottest videos from Microsoft Research's presentations at this year's SIGGRAPH.

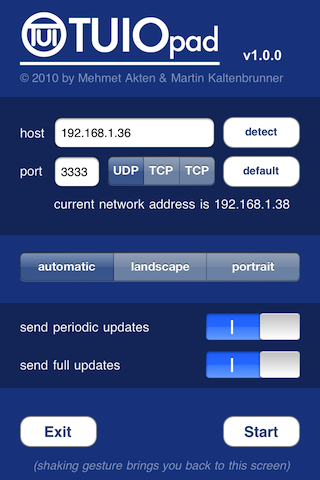

TuioPad and Pure Data

This is a very simple experiment with TuioPad, a free iPhone app.

The app sends multitouch information from the iPhone to a host using the TUIO protocol, which runs over Open Sound Control (OSC). I send the TUIO messages to a host running a Pure Data patch that displays the touch coordinates in a GEM window.
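The Pd patch does the decoding on the host side. As a rough illustration of what that involves, here is a minimal sketch assuming the TUIO 1.1 cursor profile, where each touch arrives as `/tuio/2Dcur set s x y X Y m` (session id, normalized position 0..1, velocity, acceleration), usually on UDP port 3333. No real OSC library is used: the `Object[]` stands in for already-decoded message arguments, and `TuioCursorMapper`, `parseSet` and `toPixels` are hypothetical names.

```java
/** Hypothetical helper: decodes a TUIO 1.1 2Dcur "set" message and maps it to pixels. */
class TuioCursorMapper {
    /** One touch point: session id plus normalized position (0..1, origin top-left). */
    static final class Cursor {
        final int sessionId;
        final float x;
        final float y;

        Cursor(int sessionId, float x, float y) {
            this.sessionId = sessionId;
            this.x = x;
            this.y = y;
        }
    }

    /** args = {"set", s, x, y, X, Y, m}, as an OSC library might deliver them. */
    static Cursor parseSet(Object[] args) {
        if (args.length < 7 || !"set".equals(args[0])) {
            throw new IllegalArgumentException("not a /tuio/2Dcur set message");
        }
        int s = ((Number) args[1]).intValue();
        float x = ((Number) args[2]).floatValue();
        float y = ((Number) args[3]).floatValue();
        // args[4..6] hold velocity (X, Y) and acceleration (m); unused here.
        return new Cursor(s, x, y);
    }

    /** Maps a normalized position onto a width x height pixel grid. */
    static int[] toPixels(Cursor c, int width, int height) {
        float nx = Math.max(0f, Math.min(1f, c.x)); // clamp out-of-range input
        float ny = Math.max(0f, Math.min(1f, c.y));
        return new int[] { Math.round(nx * (width - 1)), Math.round(ny * (height - 1)) };
    }

    public static void main(String[] args) {
        Object[] msg = { "set", 3, 0.5f, 0.25f, 0f, 0f, 0f };
        Cursor c = parseSet(msg);
        int[] p = toPixels(c, 641, 481);
        System.out.println("cursor " + c.sessionId + " -> (" + p[0] + ", " + p[1] + ")");
        // prints "cursor 3 -> (320, 120)"
    }
}
```

A full receiver would also track the `alive` and `fseq` messages of the same profile to know when a touch ends; they are left out to keep the sketch short.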

The Pd patch

There are other commercial products with more sophisticated features: OSCemote and TouchOSC.

Cocoa Capture and Display

The previous example previews the camera image directly. In this example, I store each frame and redisplay it in an OpenGL view; there is no QTCaptureView in the window.

My main reference is CVOCV, an open-source, Cocoa-based OpenCV experiment.

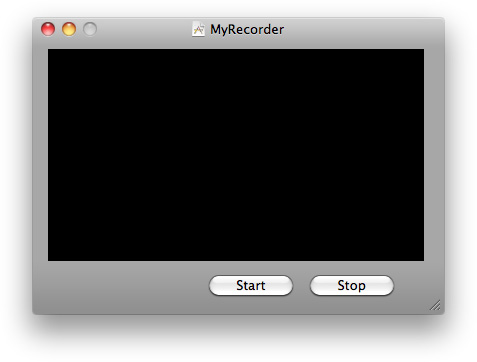

Cocoa Video Capture

The main references for video capture in Cocoa are the QTKit Application Programming Guide and the QTKit Application Tutorial, which include basic examples of displaying the camera view in a Cocoa window.

The Simple QTKit Recorder Application is my starting point. I simplified it further by removing its two buttons. Here is the layout sketch from the original sample.

Here is the Xcode 4.0 project folder.

The header file

```objectivec
#import <Cocoa/Cocoa.h>   // AppKit: IBOutlet and view classes
#import <QTKit/QTKit.h>

@interface MyRecorderController : NSObject {
@private
    IBOutlet QTCaptureView *mCaptureView;      // connected to the QTCaptureView in the nib
    QTCaptureSession *mCaptureSession;
    QTCaptureDeviceInput *mCaptureDeviceInput;
}
@end
```

The Objective-C implementation file

```objectivec
#import "MyRecorderController.h"

@implementation MyRecorderController

- (id)init
{
    self = [super init];
    if (self) {
        // Initialization code here.
    }
    return self;
}

- (void)dealloc
{
    // Manual reference counting (pre-ARC): release what awakeFromNib allocated.
    [mCaptureSession release];
    [mCaptureDeviceInput release];
    [super dealloc];
}

- (void)awakeFromNib
{
    mCaptureSession = [[QTCaptureSession alloc] init];

    BOOL success = NO;
    NSError *error = nil;

    // Use the default video camera, e.g. the built-in iSight.
    QTCaptureDevice *device = [QTCaptureDevice defaultInputDeviceWithMediaType:QTMediaTypeVideo];
    if (!device) {
        NSLog(@"No video capture device found.");
        return;
    }

    success = [device open:&error];
    if (!success) {
        NSLog(@"Device open error: %@", [error localizedDescription]);
        return;
    }

    mCaptureDeviceInput = [[QTCaptureDeviceInput alloc] initWithDevice:device];
    success = [mCaptureSession addInput:mCaptureDeviceInput error:&error];
    if (!success) {
        NSLog(@"Add input device error: %@", [error localizedDescription]);
        return;
    }

    // Attach the session to the preview view and start the camera.
    [mCaptureView setCaptureSession:mCaptureSession];
    [mCaptureSession startRunning];
}

// Called only if this controller is set as the window's delegate in the nib.
- (void)windowWillClose:(NSNotification *)notification
{
    [mCaptureSession stopRunning];
    [[mCaptureDeviceInput device] close];
}

@end
```